You may have done unit testing or heard the term unit test. Unit testing means breaking your code down into smaller units and testing each one to check that it produces the correct output.

Python has a robust unit testing library called unittest that provides a comprehensive set of testing features. However, some developers believe that unittest is more verbose than other testing frameworks.

In this article, you’ll look at how to use the pytest library to create small, concise test cases for your code. Throughout the process, you’ll learn about the pytest library’s key features.

Installation

Pytest is a third-party library that must be installed in your project environment. In your terminal window, type the following command.

```shell
pip install pytest
```

Once the command finishes, pytest is installed in your project environment, and all of its functions and classes are available for use.

Getting Started With Pytest

Before getting into what pytest can do, let’s take a look at how to use it to test the code.

Here’s a Python file test_square.py that contains a square function and a test called test_answer.

```python
# test_square.py
def square(num):
    return num**2


def test_answer():
    assert square(3) == 10
```

To run the above test, simply enter the pytest command into your terminal, and the rest will be handled by the pytest library.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest
========================================= test session starts ==========================================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 1 item

test_square.py F                                                                                  [100%]

=============================================== FAILURES ===============================================
_____________________________________________ test_answer ______________________________________________

    def test_answer():
>       assert square(3) == 10
E       assert 9 == 10
E        +  where 9 = square(3)

test_square.py:7: AssertionError
======================================= short test summary info ========================================
FAILED test_square.py::test_answer - assert 9 == 10
========================================== 1 failed in 0.27s ===========================================
```

As the output shows, the test failed because square(3) returns 9, not 10. You might be wondering how pytest discovered and ran the test when no arguments were passed.

This works because pytest uses standard test discovery. Discovery relies on naming conventions that your tests must follow before pytest will collect them:

- When no argument is specified, pytest starts from the current directory (or the configured testpaths) and searches for files named test_*.py or *_test.py.

- From these files, pytest collects functions and methods whose names start with test. This covers functions at module level and methods inside classes whose names start with Test and that do not define an __init__ method.

- Pytest also recurses into subdirectories, which makes it easy to organize your tests within your project structure.
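Here is a minimal sketch of a hypothetical file, test_discovery_demo.py, that shows these rules in action. The file name and the add() helper are assumptions made for illustration. The comments mark which names pytest's default discovery rules should collect.

```python
# test_discovery_demo.py (hypothetical file; its name matches test_*.py)


def add(a, b):
    # Not collected: the name lacks the "test" prefix, so this is a plain helper.
    return a + b


def test_add():
    # Collected: a module-level function whose name starts with "test".
    assert add(2, 3) == 5


class TestAdd:
    # Collected: the class name starts with "Test" and it defines no __init__.

    def test_negative_numbers(self):
        # Collected: a "test"-prefixed method inside a "Test"-prefixed class.
        assert add(-1, -1) == -2

    def helper_total(self):
        # Not collected: the method name lacks the "test" prefix.
        return add(1, 1)
```

Running pytest --collect-only test_discovery_demo.py on this file should list only test_add and test_negative_numbers (inside TestAdd). The helpers are ignored because their names don't match the conventions.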

Why Do Many Developers Prefer pytest?

If you’ve used the unittest library before, you’ll know that even a small test needs more code than it would with pytest. Here’s an example to demonstrate.

Assume you want to write a unittest test suite to test your code.

```python
# test_unittest.py
import unittest


class TestWithUnittest(unittest.TestCase):
    def test_query(self):
        sentence = "Welcome to GeekPython"
        self.assertTrue("P" in sentence)
        self.assertFalse("e" in sentence)

    def test_capitalize(self):
        self.assertEqual("geek".capitalize(), "Geek")
```

Now, from the command line, run these tests with unittest.

```text
D:\SACHIN\Pycharm\pytestt_lib>python -m unittest test_unittest.py
.F
======================================================================
FAIL: test_query (test_unittest.TestWithUnittest)
----------------------------------------------------------------------
Traceback (most recent call last):
  File "D:\SACHIN\Pycharm\pytestt_lib\test_unittest.py", line 9, in test_query
    self.assertFalse("e" in sentence)
AssertionError: True is not false

----------------------------------------------------------------------
Ran 2 tests in 0.001s

FAILED (failures=1)
```

As expected, test_query failed (the sentence does contain "e"), while test_capitalize passed.

However, writing these tests takes extra boilerplate:

- Importing the unittest module.

- Creating a test class (TestWithUnittest) by subclassing TestCase.

- Making assertions with unittest’s assert methods (assertTrue, assertFalse, and assertEqual).

Pytest needs none of this. Written for pytest, the same tests look like this:

```python
# test_pytest.py

def test_query():
    sentence = "Welcome to GeekPython"
    assert "P" in sentence
    assert "e" not in sentence


def test_capitalize():
    assert "geek".capitalize() == "Geek"
```

It’s as simple as that. There’s no package to import and no special assertion methods to remember, just plain assert statements. Pytest also produces more detailed output that shows exactly why an assertion failed:

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest
=================================== test session starts ===================================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 2 items

test_pytest.py F.                                                                    [100%]

======================================== FAILURES =========================================
_______________________________________ test_query ________________________________________

    def test_query():
        sentence = "Welcome to GeekPython"
        assert "P" in sentence
>       assert "e" not in sentence
E       AssertionError: assert 'e' not in 'Welcome to GeekPython'
E         'e' is contained here:
E           Welcome to GeekPython
E         ?  +

test_pytest.py:6: AssertionError
================================= short test summary info =================================
FAILED test_pytest.py::test_query - AssertionError: assert 'e' not in 'Welcome to GeekPython'
=============================== 1 failed, 1 passed in 0.31s ===============================
```

The following information can be found in the output:

- The platform on which the test is run, the library versions used, the root directory where the test files are stored, and the plugins used.

- The test file that was collected, in this case test_pytest.py.

- The test results, shown here as an "F" and a dot (.). An "F" marks a failed test and a dot (.) marks a passed test. An "E" marks an error, meaning an exception raised outside the test function itself, such as during fixture setup or teardown.

- Finally, a test summary that lists the failures and the overall result counts.

Parametrize Tests

What exactly is parametrization? Parametrization means running the same test function (or the tests in a class) several times, each time with a different set of arguments. You can check the expected results for many input values without writing a separate test for each one.

If you want to write multiple tests to evaluate various arguments for the square function, your first thought might be to write them as follows:

```python
# Function to return the square of specified number
def square(num):
    return num ** 2


# Evaluating square of different numbers
def test_square_of_int():
    assert square(5) == 25


def test_square_of_float():
    assert square(5.2) == 27.04


def test_square_of_complex_num():
    assert square(5j+5) == 50j


def test_square_of_string():
    assert square("5") == "25"
```

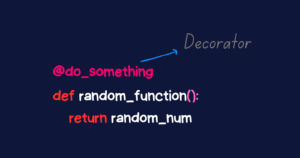

But there’s a better way, because pytest saves you from writing this boilerplate. To parametrize the arguments of a test function, pytest provides the @pytest.mark.parametrize decorator.

Using parametrization, you can eliminate code duplication and significantly reduce your test code.

```python
import pytest


def square(num):
    return num ** 2


@pytest.mark.parametrize("num, expected", [
    (5, 25),
    (5.2, 27.04),
    (5j + 5, 50j),
    ("5", "25")
])
def test_square(num, expected):
    assert square(num) == expected
```

In the code above, the @pytest.mark.parametrize decorator defines four (num, expected) tuples. Pytest runs test_square once per tuple and passes the tuple's values as the num and expected arguments. Each run is reported as a separate test, and it passes only if square(num) equals expected.
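With these inputs, two of the four cases fail. In binary floating point, 5.2 ** 2 evaluates to 27.040000000000003 rather than exactly 27.04, and the ** operator raises TypeError for the string "5". If those outcomes are expected behavior rather than bugs to flag, the sketch below shows one option. It is not the article's original test. It compares the float with pytest.approx and tests the string case separately with pytest.raises. The pytest.param ids are illustrative labels that show up in the test names.

```python
import pytest


def square(num):
    return num ** 2


@pytest.mark.parametrize(
    "num, expected",
    [
        pytest.param(5, 25, id="int"),
        # pytest.approx tolerates the tiny rounding error in 5.2 ** 2.
        pytest.param(5.2, pytest.approx(27.04), id="float"),
        pytest.param(5j + 5, 50j, id="complex"),
    ],
)
def test_square(num, expected):
    assert square(num) == expected


def test_square_of_string_raises():
    # "5" ** 2 is not defined for str, so square() raises TypeError.
    with pytest.raises(TypeError):
        square("5")
```

Running pytest -v on this file should report test_square[int], test_square[float], test_square[complex], and test_square_of_string_raises as four separate tests.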

Pytest Fixtures

Using pytest fixtures, you can avoid duplicating setup code across multiple tests. By defining a function with the @pytest.fixture decorator, you create a reusable setup that can be shared across multiple test functions or classes.

In testing, a fixture provides a defined, reliable, and consistent context for the tests. This could include environment (for example a database configured with known parameters) or content (such as a dataset). (Source: pytest documentation)

Here’s an example of when you should use fixtures. Assume you have a continuous stream of dynamic vehicle data and want to write a function collect_vehicle_number_from_delhi() to extract vehicle numbers belonging to Delhi.

```python
# fixtures_pytest.py
def collect_vehicle_number_from_delhi(vehicle_detail):
    data_collected = []
    for item in vehicle_detail:
        vehicle_number = item.get("vehicle_number", "")
        if "DL" in vehicle_number:
            data_collected.append(f"{vehicle_number}")
    return data_collected
```

To check whether the function works properly, you would write a test that looks like the following:

```python
# test_pytest_fixture.py
from fixtures_pytest import collect_vehicle_number_from_delhi


def test_collect_vehicle_number_from_delhi():
    vehicle_detail = [
        {
            "category": "Car",
            "vehicle_number": "DL04R1441"
        },
        {
            "category": "Bike",
            "vehicle_number": "HR04R1441"
        },
        {
            "category": "Car",
            "vehicle_number": "DL04R1541"
        }
    ]

    expected_result = [
        "DL04R1441",
        "DL04R1541"
    ]

    assert collect_vehicle_number_from_delhi(vehicle_detail) == expected_result
```

The test function test_collect_vehicle_number_from_delhi() above checks whether collect_vehicle_number_from_delhi() extracts the data as expected. Now suppose you also want to extract vehicle numbers belonging to another state. You would then write another function, collect_vehicle_number_from_haryana().

```python
# fixtures_pytest.py
def collect_vehicle_number_from_delhi(vehicle_detail):
    # Remaining code
    ...


def collect_vehicle_number_from_haryana(vehicle_detail):
    data_collected = []
    for item in vehicle_detail:
        vehicle_number = item.get("vehicle_number", "")
        if "HR" in vehicle_number:
            data_collected.append(f"{vehicle_number}")
    return data_collected
```

After creating this function, you would write another test function and repeat the same process.

```python
# test_pytest_fixture.py
from fixtures_pytest import collect_vehicle_number_from_haryana


def test_collect_vehicle_number_from_haryana():
    vehicle_detail = [
        {
            "category": "Car",
            "vehicle_number": "DL04R1441"
        },
        {
            "category": "Bike",
            "vehicle_number": "HR04R1441"
        },
        {
            "category": "Car",
            "vehicle_number": "DL04R1541"
        }
    ]

    expected_result = [
        "HR04R1441"
    ]

    assert collect_vehicle_number_from_haryana(vehicle_detail) == expected_result
```

Both tests build exactly the same vehicle_detail list, so you end up writing the same setup code over and over. To avoid this, put the shared data in a function decorated with @pytest.fixture:

```python
# test_pytest_fixture.py
import pytest
from fixtures_pytest import collect_vehicle_number_from_haryana
from fixtures_pytest import collect_vehicle_number_from_delhi


@pytest.fixture
def vehicle_data():
    return [
        {
            "category": "Car",
            "vehicle_number": "DL04R1441"
        },
        {
            "category": "Bike",
            "vehicle_number": "HR04R1441"
        },
        {
            "category": "Car",
            "vehicle_number": "DL04R1541"
        }
    ]


# test 1
def test_collect_vehicle_number_from_delhi(vehicle_data):
    expected_result = [
        "DL04R1441",
        "DL04R1541"
    ]
    assert collect_vehicle_number_from_delhi(vehicle_data) == expected_result


# test 2
def test_collect_vehicle_number_from_haryana(vehicle_data):
    expected_result = [
        "HR04R1441"
    ]
    assert collect_vehicle_number_from_haryana(vehicle_data) == expected_result
```

As you can see from the code above, the sample data is now defined only once, in the vehicle_data fixture. Any test that declares a parameter named vehicle_data receives the fixture’s return value. You can therefore write more tests without repeating the setup.
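Fixtures also combine with parametrization. The sketch below assumes the per-state functions were replaced by one generic helper, collect_vehicle_numbers(vehicle_detail, state_code). That helper is hypothetical and not part of fixtures_pytest.py. A single parametrized test can then cover every state while still reusing the vehicle_data fixture. The fixture is repeated so the block runs on its own.

```python
import pytest


def collect_vehicle_numbers(vehicle_detail, state_code):
    # Hypothetical generic helper: keep numbers that start with the state code.
    return [
        item["vehicle_number"]
        for item in vehicle_detail
        if item.get("vehicle_number", "").startswith(state_code)
    ]


@pytest.fixture
def vehicle_data():
    return [
        {"category": "Car", "vehicle_number": "DL04R1441"},
        {"category": "Bike", "vehicle_number": "HR04R1441"},
        {"category": "Car", "vehicle_number": "DL04R1541"},
    ]


@pytest.mark.parametrize(
    "state_code, expected_result",
    [
        ("DL", ["DL04R1441", "DL04R1541"]),
        ("HR", ["HR04R1441"]),
    ],
)
def test_collect_vehicle_numbers(vehicle_data, state_code, expected_result):
    # pytest injects vehicle_data from the fixture and the other two arguments
    # from the parametrize list, giving one test run per state code.
    assert collect_vehicle_numbers(vehicle_data, state_code) == expected_result
```

The helper uses startswith() rather than a substring check. This is a deliberate assumption, so that a state code appearing later in a number is not mistaken for the prefix. With this design, adding a state takes just one more tuple in the parametrize list.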

Fixture for Database Connection

Consider the example of creating a database connection, in which a fixture is used to set up the resources and then tear them down.

```python
# fixture_for_db_connection.py
import pytest
import sqlite3


@pytest.fixture
def database_connection():
    # Setup Phase
    conn = sqlite3.connect(":memory:")
    cur = conn.cursor()
    cur.execute(
        "CREATE TABLE users (name TEXT)"
    )

    # Pass the connection to the test function; execution pauses here
    # until the test finishes
    yield conn

    # Teardown Phase
    conn.close()
```

The database_connection() fixture creates an in-memory SQLite database and connects to it, then creates a users table. It yields the connection to the test and closes the connection once the test has finished.

To use this fixture, add it as an argument to a test function. For example, to test inserting a value into the database, simply do the following:

```python
# fixture_for_db_connection.py
def test_insert_data(database_connection):
    database_connection.execute(
        "INSERT INTO users (name) VALUES ('Virat Kohli')"
    )

    res = database_connection.execute(
        "SELECT * FROM users"
    )
    result = res.fetchall()
    assert result is not None

    assert ("Virat Kohli",) in result
```

The test_insert_data() test function takes the database_connection fixture as an argument, which eliminates the need to rewrite the database connection code.

You can now write as many test functions as you want without having to rewrite the database setup code.
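A fixture’s default scope is "function". That means pytest runs the setup and teardown around every test that requests it, so each test gets its own fresh in-memory database. The sketch below adds a hypothetical second test, test_table_starts_empty, that relies on this isolation. It repeats the fixture so it runs on its own.

```python
import sqlite3

import pytest


@pytest.fixture
def database_connection():
    # Setup: a brand-new in-memory database for every test that requests it.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT)")
    yield conn
    # Teardown: runs after the test finishes, even if the test failed.
    conn.close()


def test_insert_data(database_connection):
    database_connection.execute("INSERT INTO users (name) VALUES ('Virat Kohli')")
    rows = database_connection.execute("SELECT name FROM users").fetchall()
    assert rows == [("Virat Kohli",)]


def test_table_starts_empty(database_connection):
    # Passes regardless of test order: the row inserted by test_insert_data
    # lived in a different connection, which was already closed.
    count = database_connection.execute("SELECT COUNT(*) FROM users").fetchone()[0]
    assert count == 0
```

If several tests should share one connection instead, @pytest.fixture accepts a scope argument such as scope="module". The trade-off is that data written by one test then becomes visible to the next.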

Markers in Pytest

Pytest provides several built-in markers for test functions, which can be handy while testing.

In the earlier section, you parametrized arguments with the @pytest.mark.parametrize decorator. That decorator is itself one of these built-in markers: it marks a test function for parametrization.

Skipping Tests

If you have a test function that you want to skip during testing for some reason, you can decorate it with @pytest.mark.skip.

For example, say you add two new test functions to the test_pytest_fixture.py script but want to skip them for now. You haven’t yet written the collect_vehicle_number_from_punjab() and collect_vehicle_number_from_maharashtra() functions they test.

```python
# test_pytest_fixture.py
# Previous code here

@pytest.mark.skip(reason="Not implemented yet")
def test_collect_vehicle_number_from_punjab(vehicle_data):
    expected_result = [
        "PB3SQ4141"
    ]
    assert collect_vehicle_number_from_punjab(vehicle_data) == expected_result


@pytest.mark.skip(reason="Not implemented yet")
def test_collect_vehicle_number_from_maharashtra(vehicle_data):
    expected_result = [
        "MH05X1251"
    ]
    assert collect_vehicle_number_from_maharashtra(vehicle_data) == expected_result
```

Both test functions in this script are marked with @pytest.mark.skip and provide a reason for skipping. When you run this script, pytest will bypass these tests.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest test_pytest_fixture.py
=========================== test session starts ============================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 4 items

test_pytest_feature.py ..ss                                           [100%]

======================= 2 passed, 2 skipped in 0.05s =======================
```

The report shows that two tests passed and two were skipped (each skipped test appears as an "s").

If you want to skip a test function only when a certain condition is met, use the @pytest.mark.skipif decorator instead. Here’s an illustration.

```python
# test_pytest_fixture.py
# Previous code here

@pytest.mark.skipif(
    pytest.version_tuple < (7, 2),
    reason="pytest version is less than 7.2"
)
def test_collect_vehicle_number_from_punjab(vehicle_data):
    expected_result = [
        "PB3SQ4141"
    ]
    assert collect_vehicle_number_from_punjab(vehicle_data) == expected_result


@pytest.mark.skipif(
    pytest.version_tuple < (7, 2),
    reason="pytest version is less than 7.2"
)
def test_collect_vehicle_number_from_karnataka(vehicle_data):
    expected_result = [
        "KR3SQ4141"
    ]
    assert collect_vehicle_number_from_karnataka(vehicle_data) == expected_result
```

In this example, two test functions (test_collect_vehicle_number_from_punjab and test_collect_vehicle_number_from_karnataka) are decorated with @pytest.mark.skipif. The condition specified in each case is pytest.version_tuple < (7, 2), which means that these tests will be skipped if the installed pytest version is less than 7.2. The reason parameter provides a message explaining why the tests are being skipped.
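The condition can be any expression that evaluates to a boolean when the test module is imported. A common pattern is to skip based on the Python version or the operating system, using the standard sys module. The tests in this sketch are hypothetical:

```python
import os
import sys

import pytest


# int.bit_count() was added in Python 3.10, so skip on older interpreters.
@pytest.mark.skipif(sys.version_info < (3, 10), reason="requires Python 3.10+")
def test_bit_count():
    assert (7).bit_count() == 3


# Only meaningful where the path separator is a backslash.
@pytest.mark.skipif(sys.platform != "win32", reason="Windows-only check")
def test_windows_path_separator():
    assert os.sep == "\\"
```

Running pytest -rs adds the skip reasons to the short test summary, which helps explain why a test did not run.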

Filter Warnings

You can add warning filters to specific test functions or classes with the @pytest.mark.filterwarnings marker. This lets you control how warnings are handled during those tests.

Here’s an example that builds on the code from above.

```python
# test_pytest_fixture.py
import warnings

# Previous code here


# Helper warning function
def warning_function():
    warnings.warn("Not implemented yet", UserWarning)


@pytest.mark.filterwarnings("error:Not implemented yet")
def test_collect_vehicle_number_from_punjab(vehicle_data):
    warning_function()
    expected_result = ["PB3SQ4141"]
    assert collect_vehicle_number_from_punjab(vehicle_data) == expected_result


@pytest.mark.filterwarnings("error:Not implemented yet")
def test_collect_vehicle_number_from_karnataka(vehicle_data):
    warning_function()
    expected_result = ["KR3SQ4141"]
    assert collect_vehicle_number_from_karnataka(vehicle_data) == expected_result
```

In this example, a warning message is emitted by a helper warning function (warning_function()).

Both test functions (test_collect_vehicle_number_from_punjab and test_collect_vehicle_number_from_karnataka) are decorated with @pytest.mark.filterwarnings("error:Not implemented yet"). This filter tells pytest to turn any warning whose message begins with “Not implemented yet” into an error while these tests run.

These test functions call warning_function which, in turn, emits a UserWarning with the specified message.

The summary of the report shows that both tests failed because the UserWarning was raised as an error:

```text
================================== short test summary info ===================================
FAILED test_pytest_feature.py::test_collect_vehicle_number_from_punjab - UserWarning: Not implemented yet
FAILED test_pytest_feature.py::test_collect_vehicle_number_from_karnataka - UserWarning: Not implemented yet
===================================== 2 failed in 0.31s ======================================
```
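Turning a warning into an error is useful when that warning should never occur. When the warning is expected and you want to verify that it is emitted, the pytest.warns context manager is the usual tool. The sketch below repeats the warning_function() helper so it runs on its own. The test name is hypothetical.

```python
import warnings

import pytest


def warning_function():
    warnings.warn("Not implemented yet", UserWarning)


def test_warning_function_emits_user_warning():
    # Passes only if a UserWarning whose message matches the regular
    # expression "Not implemented" is emitted inside the with-block.
    with pytest.warns(UserWarning, match="Not implemented"):
        warning_function()
```

If the code inside the with-block emits no matching warning, pytest.warns fails the test.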

Pytest Command-line Options

Pytest provides numerous command-line options that allow you to customize or extend the behavior of test execution. You can list all the available pytest options using the following command in your terminal.

```shell
pytest --help
```

Here are some pytest command-line options that you can try when you execute tests.

Running Tests Using Keyword

You can specify which tests to run by following the -k option with a keyword or expression. Assume you have the Python file test_sample.py, which contains the tests listed below.

```python
def square(num):
    return num**2


# Test 1
def test_special_one():
    a = 2
    assert square(a) == 4


# Test 2
def test_special_two():
    x = 3
    assert square(x) == 9


# Test 3
def test_normal_three():
    x = 3
    assert square(x) == 9
```

If you want to run tests that contain "test_special", use the following command.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest -k test_special
=========================== test session starts ============================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 3 items / 1 deselected / 2 selected

test_sample.py ..                                                     [100%]

===================== 2 passed, 1 deselected in 0.07s ======================
```

The tests that have "test_special" in their name were selected, and the others were deselected.

If you want to run all other tests but not the ones with “test_special” in their names, use the following command.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest -k "not test_special"
=========================== test session starts ============================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 3 items / 2 deselected / 1 selected

test_sample.py .                                                      [100%]

===================== 1 passed, 2 deselected in 0.06s ======================
```

The expression "not test_special" in the above command tells pytest to run only the tests whose names don’t contain “test_special”.
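Keyword expressions can also combine names with and, or, and not. You can run the same selection from Python with pytest.main(), which accepts the command-line arguments as a list. In this sketch, the helper run_with_keyword(), the PassedCollector plugin class, and the generated file name are assumptions for illustration. The generated file holds the same three tests as test_sample.py.

```python
import tempfile
import uuid
from pathlib import Path

import pytest

# Same tests as test_sample.py above.
SAMPLE_TESTS = """
def square(num):
    return num**2

def test_special_one():
    assert square(2) == 4

def test_special_two():
    assert square(3) == 9

def test_normal_three():
    assert square(3) == 9
"""


class PassedCollector:
    """Tiny plugin object: pytest calls this hook for every test report."""

    def __init__(self):
        self.passed = []

    def pytest_runtest_logreport(self, report):
        if report.when == "call" and report.passed:
            self.passed.append(report.nodeid.split("::")[-1])


def run_with_keyword(expression):
    with tempfile.TemporaryDirectory() as tmp:
        # A unique file name avoids clashing with a module imported by an earlier run.
        test_file = Path(tmp) / f"test_sample_{uuid.uuid4().hex}.py"
        test_file.write_text(SAMPLE_TESTS)
        collector = PassedCollector()
        exit_code = pytest.main(
            ["-q", "-p", "no:cacheprovider", "-k", expression, str(test_file)],
            plugins=[collector],
        )
    return exit_code, collector.passed
```

For example, run_with_keyword("test_special and not test_special_two") should return an exit code of 0 and ["test_special_one"].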

Customizing Output

You can use the following options to customize the output and the report:

- -v, --verbose – Increases verbosity
- --no-header – Disables header
- --no-summary – Disables summary
- -q, --quiet – Decreases verbosity

Output of the tests with increased verbosity.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest -v test_sample.py
=========================== test session starts ============================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0 -- D:\SACHIN\Python310\python.exe
cachedir: .pytest_cache
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 3 items

test_sample.py::test_special_one PASSED                              [ 33%]
test_sample.py::test_special_two PASSED                              [ 66%]
test_sample.py::test_normal_three PASSED                             [100%]

============================ 3 passed in 0.04s =============================
```

Output of the tests with decreased verbosity.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest -q test_sample.py
...                                                                   [100%]
3 passed in 0.02s
```

Using --no-header and --no-summary together also shortens the report, although the result is not strictly identical to -q.

Test Collection

With the --collect-only (or --co) option, pytest collects all the tests but doesn’t execute them.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest --collect-only test_sample.py
=========================== test session starts ============================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 3 items

<Module test_sample.py>
  <Function test_special_one>
  <Function test_special_two>
  <Function test_normal_three>

======================== 3 tests collected in 0.02s ========================
```

Ignore Path or File during Test Collection

If you don’t want to collect tests from a specific path or file, use the --ignore=path option.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest --ignore=test_sample.py
=========================== test session starts ============================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 1 item

test_square.py .                                                      [100%]

============================ 1 passed in 0.06s =============================
```

The test_sample.py file is ignored by pytest during test collection in the above example.

Exit on First Failed Test or Error

With the -x (or --exitfirst) option, pytest stops the test run at the first failed test or error it encounters.

```text
D:\SACHIN\Pycharm\pytestt_lib>pytest -x test_sample.py
=========================== test session starts ============================
platform win32 -- Python 3.10.5, pytest-7.3.2, pluggy-1.0.0
rootdir: D:\SACHIN\Pycharm\pytestt_lib
plugins: anyio-3.6.2
collected 3 items

test_sample.py F

================================= FAILURES =================================
_____________________________ test_special_one _____________________________

    def test_special_one():
        a = 2
>       assert square(a) == 5
E       assert 4 == 5
E        +  where 4 = square(2)

test_sample.py:6: AssertionError
========================= short test summary info ==========================
FAILED test_sample.py::test_special_one - assert 4 == 5
!!!!!!!!!!!!!!!!!!!!!!!!! stopping after 1 failures !!!!!!!!!!!!!!!!!!!!!!!!!
============================ 1 failed in 0.35s =============================
```

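For this run, test_special_one was changed to assert square(a) == 5 so that it fails. Pytest stops as soon as that test fails, without running the remaining tests, and a “stopping after 1 failures” message appears in the report.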
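The -x option is shorthand for --maxfail=1. The --maxfail=N option generalizes it: pytest stops after N failures or errors. The sketch below calls pytest.main() on a hypothetical file of three deliberately failing tests. A small plugin, FailureCounter (also an assumption), counts how many tests actually failed before pytest stopped.

```python
import tempfile
import uuid
from pathlib import Path

import pytest

# Three tests that always fail, so we can see where pytest stops.
FAILING_TESTS = """
def test_one():
    assert 1 == 2

def test_two():
    assert 2 == 3

def test_three():
    assert 3 == 4
"""


class FailureCounter:
    """Plugin object that counts failed test calls via a pytest hook."""

    def __init__(self):
        self.failed = 0

    def pytest_runtest_logreport(self, report):
        if report.when == "call" and report.failed:
            self.failed += 1


def count_failures(*options):
    with tempfile.TemporaryDirectory() as tmp:
        # A unique file name avoids clashing with a module imported by an earlier run.
        test_file = Path(tmp) / f"test_failing_{uuid.uuid4().hex}.py"
        test_file.write_text(FAILING_TESTS)
        counter = FailureCounter()
        exit_code = pytest.main(
            ["-q", "-p", "no:cacheprovider", *options, str(test_file)],
            plugins=[counter],
        )
    return exit_code, counter.failed
```

With this sketch, count_failures("-x") should report one failure and count_failures("--maxfail=2") two. Calling count_failures() with no option runs all three tests.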

Conclusion

Pytest is a testing framework that allows you to write small and readable tests to test or debug your code.

In this article, you’ve learned:

- How to use pytest for testing your code

- How to parametrize arguments to avoid code duplication

- How to use fixtures in pytest

- Pytest command-line options


That’s all for now

Keep Coding✌✌